What is Re-Act?

Re-Act (Reason + Act) is a paradigm that combines reasoning and acting in a language model’s work. It was introduced in the paper “ReAct: Synergizing Reasoning and Acting in Language Models”.

Unlike other approaches to building agents, where the model only reasons, only acts, or answers immediately, Re-Act has the model alternate logical reasoning with calls to user-defined functions (tools) that interact with the outside world. The model generates a sequence of thoughts and actions, gradually moving toward a solution. This combination lets the model reason about the solution and, when necessary, consult tools or external data.

A small disclaimer: Re-Act is not a silver bullet or the only correct way to build AI agents; it is one approach among many. At the end of the article, I provide links to examples of other approaches.

Components of a Re-Act agent:

- The language model (LLM) is the “brain” of the agent that generates the decision steps. It produces thoughts (reasoning traces) and specifies the actions to perform.

- Tools are external functions or APIs that the agent can call to get information or perform operations, for example, web search, calculations, or database queries. Tools are registered with names and natural-language descriptions so that the model knows how to use them.

- Agent Executor – takes the model’s decision about which action to perform, calls the appropriate tool, and returns the result to the model. In Re-Act, this component implements the “Thought → Action → Observation” loop.

- Agent state (State) – the accumulated history of the dialogue and actions: the user request, model messages (thoughts/actions), and observations (tool results). The state is updated after every step.
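The four components can be sketched in a few lines of plain Python. This is a hypothetical, framework-free illustration: the names Tool, AgentState, run_tool and the toy calculator tool are assumptions made for the example, not any library’s API. A tool bundles a name and a natural-language description (what the model sees) with a function; the state is an append-only list of messages; run_tool is the executor’s core step.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Tool:
    """Hypothetical tool: the name and description are what the LLM sees."""
    name: str
    description: str
    func: Callable[[str], str]


@dataclass
class AgentState:
    """Accumulated history: user request, model messages, observations."""
    messages: list[tuple[str, str]] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        self.messages.append((role, content))


def run_tool(tools: dict[str, Tool], name: str, tool_input: str) -> str:
    """Executor core: look up the requested tool by name and call it."""
    if name not in tools:
        return f"Error: unknown tool {name!r}"
    return tools[name].func(tool_input)


# Toy tool for illustration only; never eval untrusted input in real code.
calculator = Tool(
    name="calculator",
    description="Evaluates an arithmetic expression such as '2 + 2'.",
    func=lambda expr: str(eval(expr, {"__builtins__": {}})),
)

tools = {calculator.name: calculator}
state = AgentState()
state.add("user", "What is 6 * 7?")
state.add("assistant", "Action: calculator\nAction Input: 6 * 7")
state.add("observation", run_tool(tools, "calculator", "6 * 7"))
print(state.messages[-1])  # → ('observation', '42')
```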

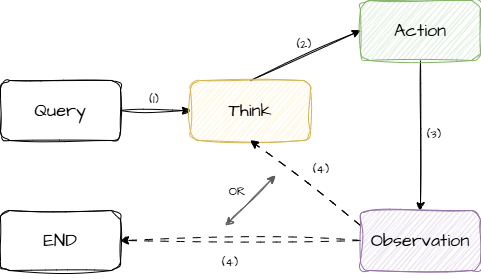

Re-Act agent work cycle

- Receiving a request (Query). The agent receives the user’s input message, which becomes the initial state.

- Generation of reasoning and action (Think). Based on the current state, the language model generates output consisting of:

- Thought: internal reasoning, where the agent analyzes the task and forms a plan.

- Action: instructions to perform a specific action (for example, calling a tool with certain arguments).

- Action execution. If the generated output contains a command (for example, Action: Search["query"]), the executor calls the appropriate tool and gets the result.

- State update (Observation). The tool’s result is saved as an observation and added to the interaction history, after which the cycle repeats: the updated state is passed to the model for the next iteration.

- Completion (END). If the model has generated a final response (for example, Answer: ...), the loop ends and the response is returned to the user.
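The cycle above fits in a short Python loop. The sketch below is a hypothetical, framework-free illustration: make_scripted_llm stands in for a real model by replaying canned replies, lookup_rate and multiply are toy tools (the 0.957 rate is fake, chosen only to match the 95.7 euros in the article’s example), and parsing uses a simple regex over the Action / Action Input / Final Answer text format that the article’s example prompt uses. A real agent would call an LLM API instead.

```python
import re
from typing import Callable, Iterable


# Hypothetical toy tools standing in for web search and a Python REPL.
def lookup_rate(pair: str) -> str:
    # Fake hard-coded rate, not real market data.
    return "0.957" if pair == "USD/EUR" else "unknown pair"


def multiply(args: str) -> str:
    a, b = (float(x) for x in args.split("*"))
    return str(round(a * b, 2))


TOOLS: dict[str, Callable[[str], str]] = {"lookup_rate": lookup_rate, "multiply": multiply}

# "Action: <tool>" followed by "Action Input: <input>" on the next line.
ACTION_RE = re.compile(r"Action:\s*(.*?)\s*\nAction Input:\s*(.*)")
FINAL_RE = re.compile(r"Final Answer:\s*(.*)")


def make_scripted_llm(replies: Iterable[str]) -> Callable[[str], str]:
    """Fake LLM that ignores the prompt and replays canned replies in order."""
    it = iter(replies)
    return lambda history: next(it)


def run_agent(question: str, llm: Callable[[str], str], tools, max_steps: int = 5) -> str:
    history = f"Question: {question}\n"  # agent state, grows every step
    for _ in range(max_steps):
        reply = llm(history)  # Think: model emits Thought + Action (or an answer)
        history += reply + "\n"
        final = FINAL_RE.search(reply)
        if final:  # END: return the final answer to the user
            return final.group(1).strip()
        action = ACTION_RE.search(reply)
        if action is None:
            history += "Observation: could not parse an action\n"
            continue
        name, tool_input = action.group(1).strip(), action.group(2).strip()
        tool = tools.get(name)
        observation = tool(tool_input) if tool else f"unknown tool {name}"
        history += f"Observation: {observation}\n"  # state update
    return "Stopped: step limit reached"


SCRIPT = [
    "Thought: I need the current USD/EUR rate.\nAction: lookup_rate\nAction Input: USD/EUR",
    "Thought: Now convert 100 dollars.\nAction: multiply\nAction Input: 100*0.957",
    "Thought: I now know the final answer\nFinal Answer: 95.7 euros",
]
print(run_agent("How much is 100 dollars in euros?", make_scripted_llm(SCRIPT), TOOLS))
# → 95.7 euros
```

The max_steps guard matters in practice: without it, a model that never emits a final answer would loop forever.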


Internal reflection in the Re-Act approach

One of the key features of the Re-Act architecture is the agent’s ability to conduct an internal dialogue, which we designate as “Thoughts”. This process includes the following aspects:

- Analysis and planning. The agent uses internal reasoning to analyze the input, decompose complex tasks into manageable steps, and decide on further actions. This can be seen as a kind of internal “brainstorm” in which the model builds a solution plan.

- Examples of reasoning:

- Planning: “To solve the problem, you first need to collect the initial data, then identify the patterns and, finally, prepare a final report.”

- Analysis: “The error indicates a problem with the database connection; most likely the access settings are incorrect.”

- Decision-making: “The best option for a user with this budget is the mid-price segment, as it offers the best balance between price and quality.”

- Problem solving: “Before optimizing the code, it is worth measuring its performance to understand which sections work the slowest.”

- Memory integration: “Since the user previously said that he prefers Python, it is better to give code examples in this language.”

- Self-reflection: “The previous approach was ineffective. I’ll try a different strategy to achieve the goal.”

- Setting goals: “Before starting the task, it is necessary to determine the criteria for successful completion.”

- Prioritization: “Before adding new features, you must first eliminate critical security vulnerabilities.”

An example of a Re-Act agent at work

Let’s consider the simplest example of a Re-Act agent at work. Say the user wants to convert 100 dollars into euros at the current exchange rate.

In a simple implementation (which uses Tavily under the hood to search the Internet and a Python REPL to run arbitrary Python code), the prompt sent to the model looks like this:

```
Answer the following questions as best you can. You have access to the following tools:

python_repl(command: str, timeout: Optional[int] = None) -> str - A Python shell. Use this to execute python commands. Input should be a valid python command. If you want to see the output of a value, you should print it out with `print(...)`.
tavily_search_results_json - A search engine optimized for comprehensive, accurate, and trusted results. Useful for when you need to answer questions about current events. Input should be a search query.

Use the following format:

Question: the input question you must answer
Thought: you should always think about what to do
Action: the action to take, should be one of [python_repl, tavily_search_results_json]
Action Input: the input to the action
Observation: the result of the action
... (this Thought/Action/Action Input/Observation can repeat N times)
Thought: I now know the final answer
Final Answer: the final answer to the original input question

Begin!

Question: How much will 100 dollars be in euros at the current exchange rate?
Thought:
```
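The tool list at the top of this prompt is generated from each tool’s name and description. Below is a minimal sketch of that rendering step using plain str.format; the template is modeled on the prompt above, and the render_prompt helper and its scratchpad parameter are hypothetical, not LangChain’s own code (LangChain fills in similar placeholders for you).

```python
# ReAct-style template modeled on the prompt above; render_prompt fills it in.
TEMPLATE = """Answer the following questions as best you can. You have access to the following tools:

{tools}

Use the following format:

Question: the input question you must answer
Thought: you should always think about what to do
Action: the action to take, should be one of [{tool_names}]
Action Input: the input to the action
Observation: the result of the action
... (this Thought/Action/Action Input/Observation can repeat N times)
Thought: I now know the final answer
Final Answer: the final answer to the original input question

Begin!

Question: {input}
Thought:{scratchpad}"""


def render_prompt(tools: dict[str, str], question: str, scratchpad: str = "") -> str:
    """Fill the template from a {name: description} mapping (hypothetical helper)."""
    tool_lines = "\n".join(f"{name} - {desc}" for name, desc in tools.items())
    return TEMPLATE.format(
        tools=tool_lines,
        tool_names=", ".join(tools),  # dict keys, in insertion order
        input=question,
        scratchpad=scratchpad,  # earlier Thought/Action/Observation turns
    )


tools = {
    "python_repl": "A Python shell. Use this to execute python commands.",
    "tavily_search_results_json": "A search engine optimized for comprehensive, accurate, and trusted results.",
}
prompt = render_prompt(tools, "How much will 100 dollars be in euros at the current exchange rate?")
print(prompt)
```

On each later iteration, the accumulated thoughts, actions, and observations would be passed as scratchpad so the model sees the whole history.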

And here is an example of the LLM’s response in the first iteration of the Think → Action → Observation loop:

```
Thought: To find the answer, we need to know the current exchange rate between dollars and euros.
Action: tavily_search_results_json
Action Input: "give me current exchange rate dollars euros"
```

As we can see, the LLM reasoned (Thought) that it first needs to get the current exchange rate, and decided to call Tavily to find it (Action).

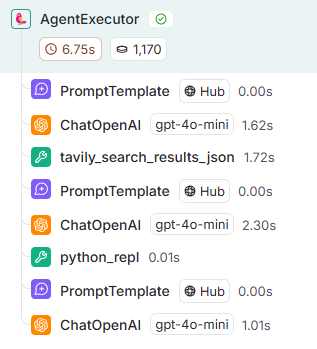

The full cycle the agent goes through to answer the question in this example looks like this:

First, the model looks up the current rate with Tavily (tavily_search_results_json), then it calls python_repl to convert $100 into euros, and finally outputs the answer:

```
The result of the calculation shows that 100 US dollars will be converted into approximately 95.7 euros at the current exchange rate.

Final Answer: 95.7 euros
```
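The python_repl step boils down to executing model-written code, capturing what it prints, and returning that text as the observation. Below is a rough stand-in for such a tool; the function run_python is hypothetical, the exact command the model sent is not shown in the article, and the rate 0.957 is simply back-computed from the 95.7 euros answer. LangChain’s PythonREPL works along similar lines, but this is not its code, and exec on model output must never run outside a sandbox.

```python
import contextlib
import io


def run_python(command: str) -> str:
    """Execute a command and return whatever it printed (toy REPL stand-in)."""
    buffer = io.StringIO()
    try:
        with contextlib.redirect_stdout(buffer):
            exec(command, {})  # fresh globals on each call; NOT a sandbox
    except Exception as exc:  # errors go back to the model as text
        return f"{type(exc).__name__}: {exc}"
    return buffer.getvalue()


# Hypothetical command the model could send as Action Input.
observation = run_python("rate = 0.957\nprint(round(100 * rate, 2))")
print(observation)  # → 95.7
```

Returning errors as text rather than raising lets the model read the traceback summary as an observation and try again.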

Python code for this example, based on LangChain’s create_react_agent and AgentExecutor, looks roughly like this:

```python
# Assumes langchain, langchain-community, langchain-experimental and
# langchain-openai are installed, and OPENAI_API_KEY / TAVILY_API_KEY are set.
from langchain import hub
from langchain.agents import AgentExecutor, create_react_agent
from langchain_community.tools.tavily_search import TavilySearchResults
from langchain_core.tools import Tool
from langchain_experimental.utilities import PythonREPL
from langchain_openai import ChatOpenAI

# Wrap a Python REPL as a tool the agent can call by name.
python_repl = PythonREPL()
repl_tool = Tool(
    name="python_repl",
    description="A Python shell. Use this to execute python commands. Input should be a valid python command. If you want to see the output of a value, you should print it out with `print(...)`.",
    func=python_repl.run,
)

llm = ChatOpenAI(model_name="gpt-4o-mini")

# Web search via Tavily, returning only the top result.
tools = [repl_tool, TavilySearchResults(max_results=1)]

# Standard ReAct prompt (Thought / Action / Action Input / Observation format).
prompt = hub.pull("hwchase17/react")

# Construct the ReAct agent and the executor that runs the loop.
agent = create_react_agent(llm, tools, prompt)
agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=True)

result = agent_executor.invoke({"input": "How much will 100 dollars be in euros at the current exchange rate?"})
print(result["output"])
```

Implementation details of Re-Act agents

Moving from theory to practice, note that implementations in different frameworks differ from the one proposed in the original paper (ReAct: Synergizing Reasoning and Acting in Language Models), since it was published in 2022 and LLMs have advanced considerably since then.

For example, here are some interesting details of the prebuilt ReAct agent in the LangGraph library:

- LangGraph uses tool calling (function calling, https://platform.openai.com/docs/guides/function-calling) to let the LLM decide which user functions to call, while the paper used a raw-text prompt and parsed the LLM’s text response. This is because built-in tool calling did not yet exist when the paper was written. Today many modern LLMs (including open-source and on-premise ones) are specifically trained for function calling (for example, tool calling in Qwen).

- LangGraph passes tool arguments as structured parameters instead of one large string. Again, the paper’s approach reflects the LLM limitations of the time.

- LangGraph allows the model to call several tools at once.

- Finally, in the paper the model explicitly generated a Thought before deciding which tools to call; this is the Reasoning part of Re-Act. LangGraph does not do this by default, mainly because LLM capabilities have improved significantly and this step is no longer as necessary.
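To illustrate structured and parallel tool calls, here is a framework-free sketch. Each call carries a tool name, a dict of named arguments, and an id, loosely modeled on the tool_calls entries that tool-calling models return in LangChain; the get_exchange_rate tool, its fake rates, the ids, and the execute helper are all hypothetical. The calls run concurrently in a thread pool to mirror the model requesting several tools in one turn.

```python
from concurrent.futures import ThreadPoolExecutor


def get_exchange_rate(base: str, quote: str) -> float:
    """Hypothetical tool with typed, named parameters (fake fixed rates)."""
    return {("USD", "EUR"): 0.957, ("USD", "GBP"): 0.8}[(base, quote)]


TOOLS = {"get_exchange_rate": get_exchange_rate}

# One model turn requesting two independent tool calls with structured args.
tool_calls = [
    {"name": "get_exchange_rate", "args": {"base": "USD", "quote": "EUR"}, "id": "call_1"},
    {"name": "get_exchange_rate", "args": {"base": "USD", "quote": "GBP"}, "id": "call_2"},
]


def execute(call: dict) -> dict:
    """Run one call; args are unpacked as keyword arguments, not parsed from text."""
    result = TOOLS[call["name"]](**call["args"])
    return {"tool_call_id": call["id"], "content": str(result)}


with ThreadPoolExecutor() as pool:
    results = list(pool.map(execute, tool_calls))  # order matches tool_calls

print(results)
```

The tool_call_id lets each result be matched back to the call that produced it when the observations are returned to the model.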


Pros and cons of Re-Act

Re-Act is not a universal solution and has its pros and cons.

Pros:

- Flexible step-by-step problem solving. The agent independently plans and carries out a series of actions. It can choose from a variety of tools and perform several steps while keeping context in memory. This lets it solve complex problems dynamically by breaking them into subtasks.

- Ease of development: Re-Act agents are relatively easy to implement, as their logic comes down to the “thought → action → observation” cycle. Many frameworks (for example, LangGraph) already offer ready-made Re-Act implementations.

- Universality. Re-Act agents are applicable in a wide variety of domains – from information search to device management – thanks to the ability to connect the necessary tools.

Cons:

- Low efficiency in some scenarios: Each step requires a separate LLM call, which makes long chains slow and expensive. Each action (tool call) is accompanied by model reasoning, increasing the total number of calls to the model.

- No global plan: The agent plans only one step ahead on each iteration. Without a holistic plan, it can choose suboptimal actions, hit dead ends, or repeat itself. This makes it hard to solve problems that require thinking several steps ahead.

- Limited scalability: If an agent has too many possible tools or a very long history, it becomes difficult for it to choose the right next action. The context and number of options may exceed what a single model can handle effectively, which reduces the quality of the results.
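A back-of-the-envelope calculation makes the efficiency drawback concrete. The numbers are hypothetical assumptions: a 500-token base prompt, and about 200 tokens of thought, action, and observation appended per step, with the whole history resent on every call (no prompt caching). Under these assumptions the number of LLM calls grows linearly with the number of tool calls, while the total prompt tokens grow roughly quadratically.

```python
def react_cost(tool_calls: int, base_tokens: int = 500, step_tokens: int = 200) -> tuple[int, int]:
    """Return (LLM calls, total prompt tokens) for a naive ReAct run.

    One call per tool step plus one final call that produces the answer;
    call i resends the base prompt plus i earlier steps of history.
    """
    calls = tool_calls + 1
    total = sum(base_tokens + i * step_tokens for i in range(calls))
    return calls, total


for n in (1, 5, 10):
    print(n, react_cost(n))
# 1 (2, 1200)
# 5 (6, 6000)
# 10 (11, 16500)
```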

Examples of other approaches to building agents

As I said, Re-Act is neither the only nor necessarily the best way to build AI agents. Moreover, the original paper itself compares Re-Act with CoT, and the results show that “vanilla” Re-Act does not always win.

Hybrid approaches that use variations and combinations of several agent-building methods are now common. For example, LangGraph lets you build arbitrary agent graphs and multi-agent systems.

Here are examples of other agent-building and prompting approaches that Re-Act competes with or can be combined with:

- Chain-of-Thought (CoT) – link

- Self-Ask Prompting – link

- Self-Discover – link

- Tree-of-Thoughts (ToT) – link

- Language Agent Tree Search (LATS) – link

- Reflexion – link

- MRKL Systems (Modular Reasoning, Knowledge and Language) – link

- PAL (Program-aided Language Models) – link

- Toolformer – link

- Agents with planning:

- Plan-and-Execute – link 1, link 2

- ReWOO (Reasoning WithOut Observations) – link 1, link 2

- LLMCompiler – link 1, link 2

- Multi-Agent Systems:


About The Author: Yotec Team
