Recently, my team had two goals:

● Improve the quality of user and business interaction through audio data analytics.

● Reduce the time colleagues spend on routine tasks.

That’s how two projects were born 👇

● Summarization of Zoom meetings. After a meeting ends, participants receive minutes with the key points of the conversation.

We expect the comments to ask about the similar built-in feature in Zoom, so to answer in advance: we deliberately wanted to develop our own solution. The risk of losing access to an external product is too high today.

● Customer request dashboard. It helps analyze calls to UMoney call centers and identify the most common user problems.

The most common audio tasks

● STT (speech-to-text) – convert audio into text (speech recognition).

● TTS (text-to-speech) – convert text into speech.

● Audio classification – assign a class to a sound by finding a pattern or group of sounds shared by similar recordings. For example, you can recognize a bird species by its song.

● Source Separation – split a recording by sound source. For example, isolating a specific instrument while an orchestra plays.

● Diarization – divide the dialogue into the speech of individual speakers.

● Voice Activity Detection – determine the presence of speech in some part of the audio recording.

● Audio Enhancement – improve sound quality.

Zoom call summarization

Let’s divide this task into several subtasks:

● Transcription – extract the text from the audio.

● Diarization – determine which speaker is talking at each timecode.

● Identification – identify each speaker by name.

● Summarization – create a summary of the recording.

We use Whisper from OpenAI for transcription. There are many solutions on the market, but in our experience this model gives the best results across languages. It comes with a well-documented library, and it is clear how its hyperparameters work and how to configure them.

Whisper is an encoder-decoder Transformer. The encoder takes a spectrogram as input. The decoder takes special tokens (language, task, timecodes) together with the tokens transcribed so far, and uses them to predict the next token.

Whisper (OpenAI) architecture diagram

Hyperparameters

Hyperparameters help improve transcription quality in a project. Here are the most important ones:

● initial_prompt – context. A string in which you can list domain-specific terms that Whisper most likely did not see in its training data. The prompt is fed to the decoder as preceding text, which makes Whisper more likely to output those terms correctly; the model itself is not retrained.

● language – the language code of the audio. It is optional, since Whisper can detect the language itself, but it is better to set it explicitly.

● task – the task type: transcribe (text in the source language) or translate (translation into English).

● condition_on_previous_text – passes the text of the previous chunk (fragment) to the model as a prompt, so the next chunk is transcribed with that context in mind.

● beam_size – the number of hypotheses kept in beam search when predicting the next token. Larger values explore more candidates at the cost of speed.

● temperature – the sampling temperature; the default value is 0. beam_size works only at zero temperature; at higher temperatures, use the best_of hyperparameter instead. When the model predicts the next token, it builds a probability distribution over tokens: at temperature 0 it picks the most likely one, and the higher the temperature, the more uniform the distribution it samples from.

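● length_penalty – a penalty on sequence length used when ranking hypotheses. The smaller the value, the shorter the transcriptions tend to be; the larger the value, the longer. In the reference openai-whisper package it is unset (None) by default, which means simple length normalization, and accepted values lie between 0 and 1; other Whisper implementations may use different defaults.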
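As a rough illustration, the hyperparameters above could be passed to the open-source `openai-whisper` package as follows. This is a minimal sketch: the model size, the file name, the `ru` language code and the prompt terms other than UMoney are assumptions made for the example.

```python
def build_transcribe_options(domain_terms, language="ru"):
    """Collect the decoding hyperparameters discussed above into one dict."""
    return {
        # Domain vocabulary, given to the decoder as preceding context.
        "initial_prompt": ", ".join(domain_terms),
        "language": language,                # assumed language code
        "task": "transcribe",                # or "translate" (into English)
        "condition_on_previous_text": True,  # carry context between chunks
        "beam_size": 5,                      # only used at temperature 0
        "temperature": 0.0,
    }


def transcribe(audio_path, options, model_name="small"):
    # Imported lazily so the options can be built without the package installed.
    import whisper  # pip install openai-whisper

    model = whisper.load_model(model_name)
    return model.transcribe(audio_path, **options)


# "wallet" and "chargeback" are made-up example terms.
options = build_transcribe_options(["UMoney", "wallet", "chargeback"])
# result = transcribe("meeting.wav", options)  # hypothetical file name
# print(result["text"])
```

If sampling at a non-zero temperature is preferred, `beam_size` would be swapped for `best_of` in the same dict.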

Diarization

The next task in our pipeline is diarization: splitting the audio recording by speaker.

The diarization process is as follows:

● Whisper internally solves a next-speaker prediction task, which makes it convenient to build our own diarization on top of it.

● Next, we compute voice embeddings for each chunk and cluster them. For this clustering, set the Whisper hyperparameter word_timestamps=True to get exact timecodes for each word.

As a result of diarization, we get timecodes with speaker numbers.
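The clustering step can be sketched in a few lines. The example below uses a simple greedy clustering over cosine similarity as a stand-in (the exact clustering algorithm is not specified here), and the two-dimensional embeddings and the 0.8 threshold are made-up values for illustration.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def cluster_chunks(chunks, threshold=0.8):
    """Greedy clustering. chunks: list of (start_sec, end_sec, embedding).

    Returns a list of (start_sec, end_sec, speaker_number).
    """
    centroids, counts, labeled = [], [], []
    for start, end, emb in chunks:
        scores = [cosine(emb, c) for c in centroids]
        best = max(range(len(scores)), key=scores.__getitem__, default=None)
        if best is None or scores[best] < threshold:
            # No known speaker is close enough: open a new cluster.
            centroids.append(list(emb))
            counts.append(1)
            best = len(centroids) - 1
        else:
            # Update the running mean embedding of the matched speaker.
            n = counts[best]
            centroids[best] = [(c * n + x) / (n + 1)
                               for c, x in zip(centroids[best], emb)]
            counts[best] = n + 1
        labeled.append((start, end, best))
    return labeled


chunks = [
    (0.0, 4.2, [1.0, 0.1]),
    (4.2, 9.0, [0.1, 1.0]),
    (9.0, 12.5, [0.9, 0.2]),
]
for start, end, speaker in cluster_chunks(chunks):
    print(f"{start:>5.1f}-{end:<5.1f} speaker {speaker}")
```

Real voice embeddings have hundreds of dimensions, and a production system would more likely use an offline method such as agglomerative clustering, but the output has the same shape: timecodes with speaker numbers.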

Identification

We compare the resulting clusters with data from our internal voice bank or with a vectorized multichannel recording. The voice bank is a database of voices in which the key is the employee’s name and the value is the vector of their voice. After the comparison, we take the top match by cosine similarity.
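A minimal sketch of this lookup, assuming the voice bank is a plain dictionary of name to vector. The names and the three-dimensional vectors are hypothetical.

```python
import math


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def identify(cluster_vector, voice_bank):
    """Return (name, score) of the voice-bank entry closest to the cluster."""
    name = max(
        voice_bank,
        key=lambda n: cosine_similarity(cluster_vector, voice_bank[n]),
    )
    return name, cosine_similarity(cluster_vector, voice_bank[name])


# Hypothetical voice bank: employee name -> voice vector.
voice_bank = {
    "Anna": [0.9, 0.1, 0.0],
    "Boris": [0.1, 0.8, 0.3],
}
name, score = identify([0.85, 0.2, 0.05], voice_bank)
print(name, round(score, 3))
```

In practice it may be worth adding a minimum similarity threshold, so that a speaker who is not in the voice bank keeps a number instead of getting the wrong name.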

- As soon as we receive the audio, it is converted to WAV format with a sampling rate of 16 kHz.

- The audio is then preprocessed: for example, noise is removed and the volume is normalized.

- The audio is split into chunks (fragments) of 25-30 seconds. We cut where the amplitude drops so as not to split words. Whisper can split the audio itself, but it has a quirk: it may switch to another language or get stuck on a single chunk.

- Whisper transcribes each chunk and returns the text extracted from the audio.

- Diarization is performed either with the pyannote library (which does not work very well without additional training) or with our own clustering based on Whisper outputs.

- Diarization output: timecodes and speaker numbers.

- Identification: we map the speaker numbers to names.
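The chunking step is the least obvious one, so here is one possible way to pick cut points where the amplitude drops. It is a sketch under assumptions: the 50 ms analysis window and the function name are invented for the example, and the demo runs on a synthetic signal at 100 Hz rather than real 16 kHz audio to keep it fast.

```python
def split_points(samples, sample_rate, min_sec=25, max_sec=30, window_sec=0.05):
    """Return sample indices at which to cut the audio into 25-30 s chunks."""
    window = max(1, int(window_sec * sample_rate))
    cuts, start = [], 0
    # Keep cutting while the remaining audio is longer than one chunk.
    while len(samples) - start > max_sec * sample_rate:
        lo = start + int(min_sec * sample_rate)
        hi = start + int(max_sec * sample_rate)
        # Quietest window between 25 and 30 seconds after the chunk start.
        quietest = min(
            range(lo, hi - window + 1, window),
            key=lambda i: sum(abs(s) for s in samples[i:i + window]),
        )
        cut = quietest + window // 2
        cuts.append(cut)
        start = cut
    return cuts


# Synthetic demo: 60 s of "speech" with a pause at 27.0-27.5 s.
sample_rate = 100
samples = [1.0] * (60 * sample_rate)
for i in range(2700, 2750):
    samples[i] = 0.0

cuts = split_points(samples, sample_rate)
print([c / sample_rate for c in cuts])  # cut positions in seconds
```

With real recordings the same idea is usually implemented with NumPy on a smoothed energy envelope, since a pure-Python loop is slow at 16 kHz.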

Examples of transcription and diarization outputs

Summarization

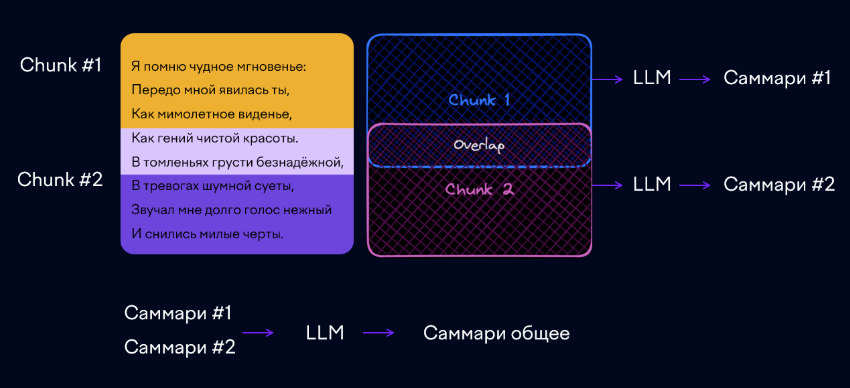

At the summarization stage, we know what each speaker said. This information has to be passed to a large language model (LLM). Because the LLM’s input context is limited, we cannot always pass everything at once, so we cut the text into small fragments while trying to keep each paragraph’s meaning within a single chunk (semantic splitting).

Each chunk is sent to the LLM with a system prompt asking for a summary. We then collect all the partial summaries and ask the LLM to produce one overall summary from them.

The summarization result

This is what we got when the audio recording shown above went through our pipeline.
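This two-stage scheme can be sketched as follows. The `llm` argument is a hypothetical callable that takes a system prompt and a text and returns a string. Packing whole paragraphs into chunks is used here as a simple stand-in for semantic splitting, and the prompts and the character limit are illustrative.

```python
def split_by_paragraphs(paragraphs, max_chars=2000):
    """Pack whole paragraphs into chunks of at most max_chars characters.

    A paragraph longer than max_chars becomes a chunk of its own.
    """
    chunks, current = [], ""
    for p in paragraphs:
        if current and len(current) + len(p) + 1 > max_chars:
            chunks.append(current)
            current = p
        else:
            current = f"{current}\n{p}" if current else p
    if current:
        chunks.append(current)
    return chunks


def summarize(paragraphs, llm, max_chars=2000):
    """Summarize each chunk, then merge the partial summaries."""
    chunk_prompt = "Summarize the key points of this meeting fragment."
    partial = [llm(chunk_prompt, chunk)
               for chunk in split_by_paragraphs(paragraphs, max_chars)]
    if len(partial) == 1:
        return partial[0]
    merge_prompt = "Merge these partial summaries into one overall summary."
    return llm(merge_prompt, "\n".join(partial))


# Stand-in for a real model call, only to show the data flow.
def echo_llm(system_prompt, text):
    return text[:20]


print(summarize(["Speaker 0: hello.", "Speaker 1: hi."], echo_llm))
```

If the merged partial summaries still exceed the context window, the merge step can be applied recursively in the same way.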

The output also included a full transcript of the audio.

Metrics

We track three main indicators:

1. Transcription: we measure WER (Word Error Rate) and CER (Character Error Rate).

Example: take the sentence “Mama myla ramu” (Russian for “Mom washed the frame”). Suppose it is transcribed with one wrong letter in the first word, for instance “Mana myla ramu”. Then WER = 1/3, since one word out of three is transcribed incorrectly, and CER = 1/12, since one of the twelve letters is wrong.

2. Summarization: we use the ROUGE-N metric, where N is the N-gram size. An N-gram is a sliding window of N characters or words.

Example: for the first word of the previous example, “MAMA” (“mom”), with an N-gram size of 2 we get three N-grams: MA, AM and MA. With an N-gram size of 3 we get two N-grams: MAM and AMA.

We therefore need a reference summary written by assessors, customers or ourselves. We then simply measure the N-gram overlap between the generated summary and the reference.

3. Classification (for identification): we use standard metrics F-score, accuracy, precision and recall.
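For reference, the first two metrics fit in a few lines of plain Python. This is a sketch rather than a replacement for an evaluation library: WER and CER are computed from the Levenshtein distance, CER ignores spaces (which matches the 1/12 figure for a twelve-letter sentence), and ROUGE-N is shown in its recall form. The sample strings are illustrative.

```python
from collections import Counter


def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (words or characters)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, 1):
        cur = [i]
        for j, h in enumerate(hyp, 1):
            cur.append(min(prev[j] + 1,                # deletion
                           cur[j - 1] + 1,             # insertion
                           prev[j - 1] + (r != h)))    # substitution
        prev = cur
    return prev[-1]


def wer(ref, hyp):
    ref_words = ref.split()
    return edit_distance(ref_words, hyp.split()) / len(ref_words)


def cer(ref, hyp):
    ref_chars = ref.replace(" ", "")
    return edit_distance(ref_chars, hyp.replace(" ", "")) / len(ref_chars)


def ngrams(tokens, n):
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def rouge_n_recall(reference, candidate, n):
    """Share of reference N-grams that also occur in the candidate."""
    ref = Counter(ngrams(reference, n))
    cand = Counter(ngrams(candidate, n))
    overlap = sum((ref & cand).values())
    return overlap / max(1, sum(ref.values()))


print(wer("mama myla ramu", "mana myla ramu"))   # one wrong word out of three
print(cer("mama myla ramu", "mana myla ramu"))   # one wrong letter out of twelve
print(ngrams("MAMA", 2), ngrams("MAMA", 3))
print(rouge_n_recall("the cat sat".split(), "the cat ran".split(), 2))
```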

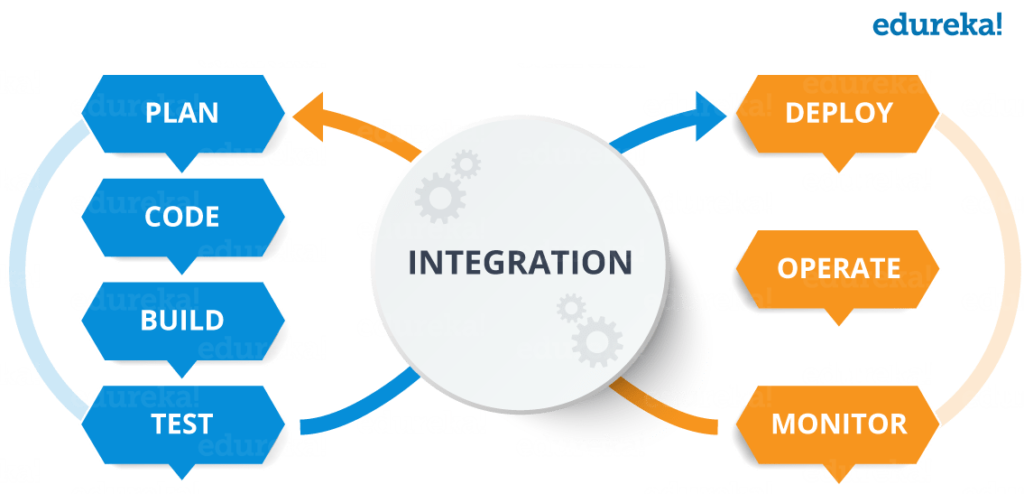

Customer request dashboard

We also analyze calls to our call centers. When a client calls, we extract the topic of the request and show it on the dashboard. This lets us track spikes in activity on different topics. The dashboard can be refreshed daily or weekly to follow how the indicators change over time and to resolve user problems.
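The aggregation behind such a dashboard can be as simple as counting topics per day. The sketch below assumes each call has already been reduced to a (date, topic) pair. The topic names, the dates and the "twice the daily mean" spike rule are invented for the example.

```python
from collections import Counter, defaultdict
from datetime import date


def topics_per_day(calls):
    """calls: iterable of (day, topic) pairs -> {day: Counter of topics}."""
    daily = defaultdict(Counter)
    for day, topic in calls:
        daily[day][topic] += 1
    return daily


def spikes(daily, topic, factor=2.0):
    """Days on which the topic count exceeds factor times its daily mean."""
    counts = {day: counter[topic] for day, counter in daily.items()}
    if not counts:
        return []
    mean = sum(counts.values()) / len(counts)
    return sorted(day for day, n in counts.items() if n > factor * mean)


# Hypothetical data: one quiet day, one normal day, one spike.
calls = (
    [(date(2024, 1, 1), "payments"), (date(2024, 1, 1), "login")]
    + [(date(2024, 1, 2), "payments")]
    + [(date(2024, 1, 3), "payments")] * 7
)
daily = topics_per_day(calls)
print(spikes(daily, "payments"))
```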

This is what our dashboard looks like:

About The Author: Yotec Team
