In this article, we walk through a simple example of using ChatGPT in machine learning, suggest several ways to apply it to real-world problems, and point out cases where it does not help at all.

Many of us have seen plenty of news and articles about ChatGPT, a neural network you can talk to, ask questions, and ask for help with writing code. I have been using it for work tasks for more than a month, and the deeper I dig, the more hidden possibilities I discover.

Since the disclaimer “this article was written entirely with ChatGPT” is no longer a novelty, let’s follow tradition and let ChatGPT introduce itself:

“I am ChatGPT, the largest language learning model built by OpenAI to provide answers to a variety of questions and engage in conversations on a variety of topics. I am trained on a huge amount of textual data and can generate human-like responses using natural language processing techniques.”

From here on, we address it in English, which makes it easier to ask questions about a specific code implementation.

Building the foundations

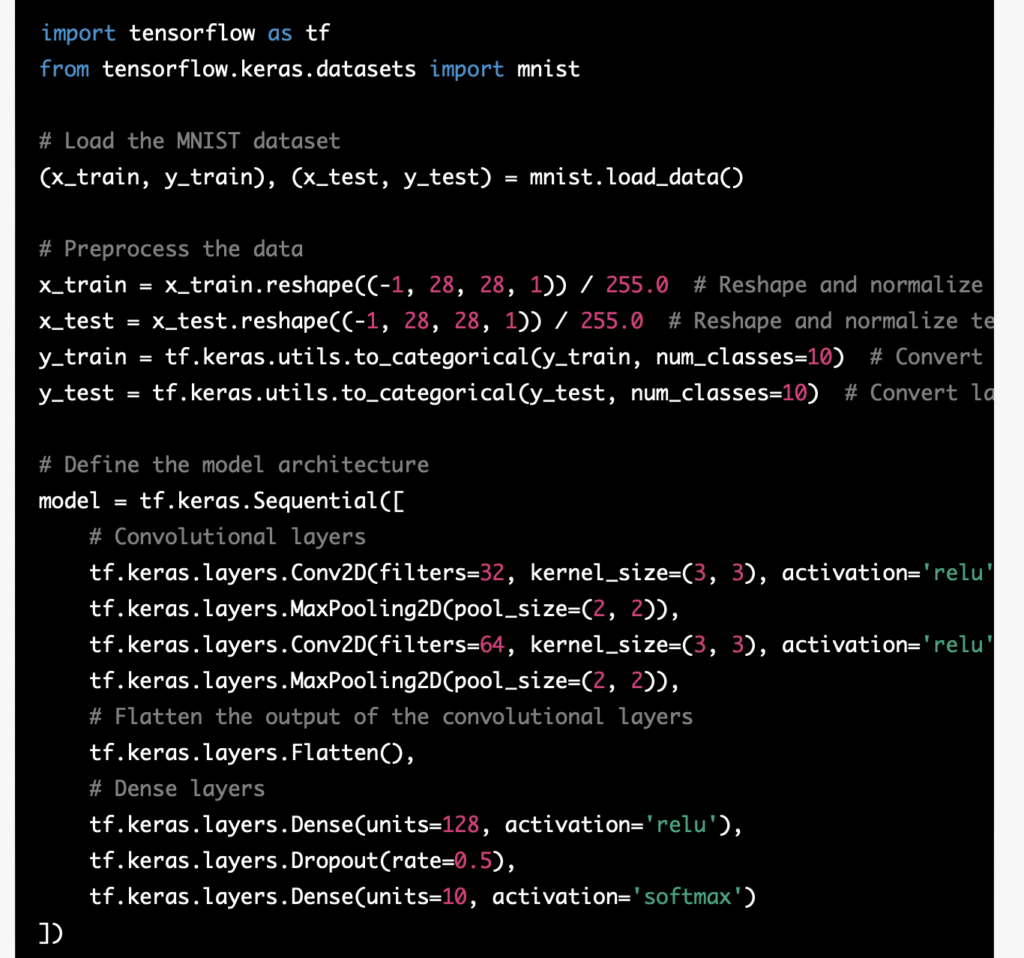

Let’s start with the simplest example: building a training pipeline for an image classification problem. At this stage we want only a foundation, the simplest pipeline skeleton, which we will improve later. We take the standard MNIST problem as our example. The first step is to ask ChatGPT to create a basic model for image classification:

“Construct a CNN model for the MNIST task”

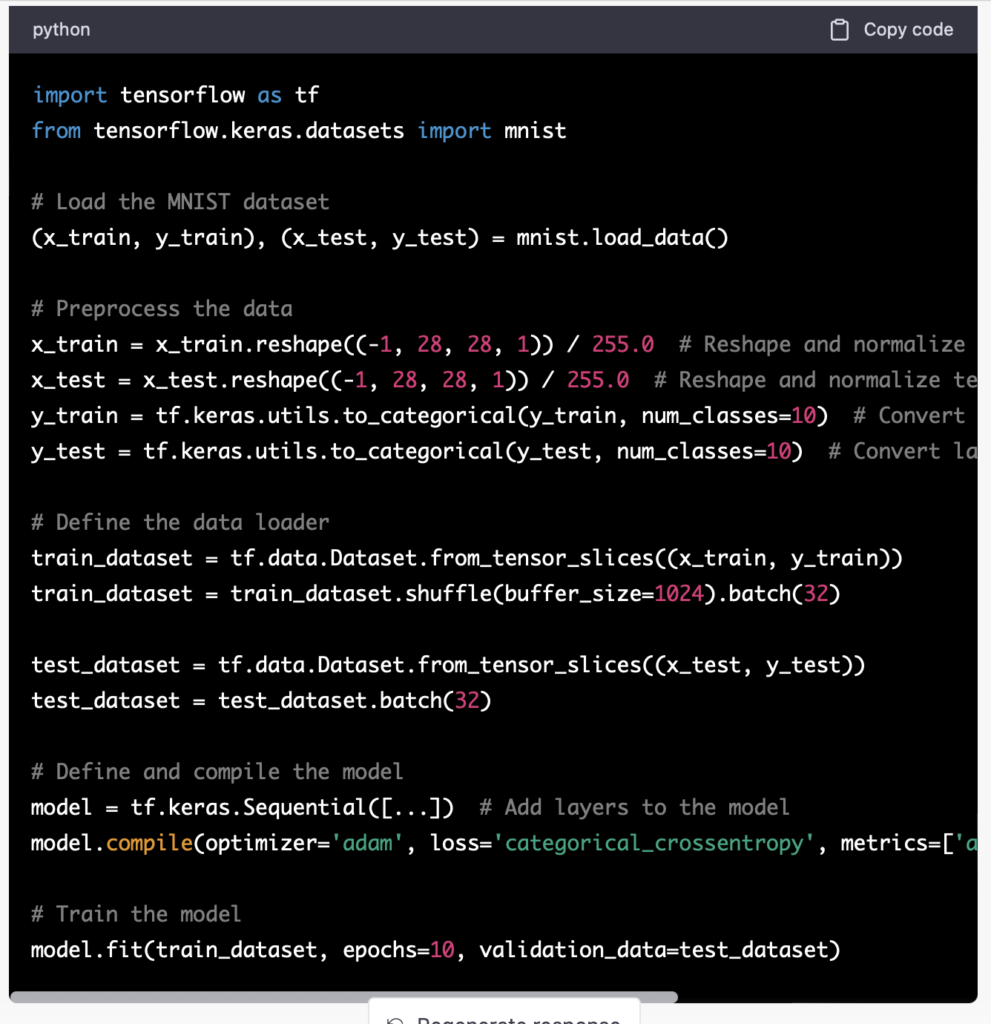
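For readers who cannot see the screenshot, a baseline of the kind this prompt tends to produce might look like the sketch below. It is a minimal illustration rather than ChatGPT’s verbatim answer: the function name `build_mnist_cnn` and the layer sizes are assumptions chosen for brevity.

```python
import tensorflow as tf


def build_mnist_cnn(num_classes: int = 10) -> tf.keras.Model:
    """Small convolutional baseline for 28x28 grayscale digits (illustrative sizes)."""
    model = tf.keras.Sequential([
        tf.keras.layers.Input(shape=(28, 28, 1)),
        # Two conv/pool stages extract local features.
        tf.keras.layers.Conv2D(32, 3, activation="relu"),
        tf.keras.layers.MaxPooling2D(),
        tf.keras.layers.Conv2D(64, 3, activation="relu"),
        tf.keras.layers.MaxPooling2D(),
        # A dense head maps the features to class probabilities.
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(128, activation="relu"),
        tf.keras.layers.Dense(num_classes, activation="softmax"),
    ])
    model.compile(
        optimizer="adam",
        # Integer labels (0..9), so the sparse variant of cross-entropy is used.
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

Calling `build_mnist_cnn().summary()` prints the layer shapes, which is a quick way to check that the generated architecture matches what you asked for.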

Now that the model is ready, let’s ask it to create an example training pipeline, starting with the data loader.

- “Give me a short baseline example of tensorflow data loader for image classification task using MNIST as an example”

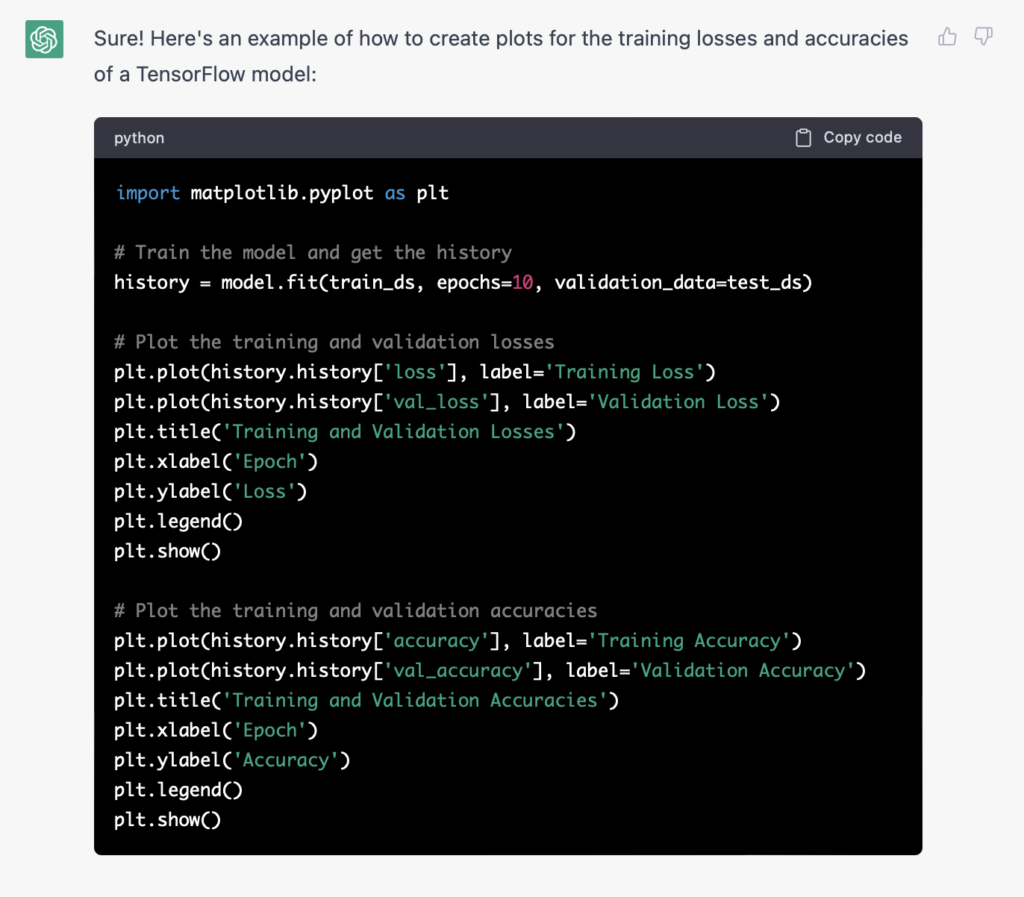
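A data loader for this prompt could be sketched roughly as follows. This is an illustration under stated assumptions, not the answer from the screenshot: the helper name `make_dataset` and its defaults are hypothetical, and the function takes NumPy arrays so that it does not depend on where the data comes from.

```python
import numpy as np
import tensorflow as tf


def make_dataset(images, labels, batch_size=64, training=True):
    """Turn uint8 images of shape (N, 28, 28) and integer labels into a tf.data pipeline."""
    # Add a channel axis and scale pixel values to [0, 1].
    images = images.astype("float32")[..., np.newaxis] / 255.0
    ds = tf.data.Dataset.from_tensor_slices((images, labels.astype("int64")))
    if training:
        # Shuffle over the whole set; MNIST is small enough to fit in memory.
        ds = ds.shuffle(buffer_size=len(images))
    # Batch, then prefetch so data preparation overlaps with training.
    return ds.batch(batch_size).prefetch(tf.data.AUTOTUNE)
```

With the real data, the arrays would come from `tf.keras.datasets.mnist.load_data()`, which returns `(x_train, y_train), (x_test, y_test)`; the resulting dataset can be passed directly to `model.fit`.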

Finally, ask it to plot the training curves.

- “Create plots for training losses and accuracies”
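A plotting helper of the kind this prompt asks for might look like the sketch below. It assumes the dictionary stored in the `history` attribute of the object returned by Keras `model.fit`, with the keys `loss` and `accuracy` and, when validation data is used, `val_loss` and `val_accuracy`; the function name `plot_history` is hypothetical.

```python
import matplotlib.pyplot as plt


def plot_history(history):
    """Plot loss and accuracy per epoch from a Keras-style history dict."""
    epochs = range(1, len(history["loss"]) + 1)
    fig, axes = plt.subplots(1, 2, figsize=(10, 4))
    for ax, metric in zip(axes, ("loss", "accuracy")):
        ax.plot(epochs, history[metric], label=f"train {metric}")
        # Validation curves are optional; draw them only when present.
        if f"val_{metric}" in history:
            ax.plot(epochs, history[f"val_{metric}"], label=f"validation {metric}")
        ax.set_xlabel("epoch")
        ax.set_ylabel(metric)
        ax.legend()
    fig.tight_layout()
    return fig
```

A typical call would be `plot_history(model.fit(...).history)` followed by `plt.show()` or `fig.savefig(...)`.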

So in a few steps, using only ChatGPT, we got the basis of a training pipeline for the MNIST dataset. Now it’s time to move on to tasks that are closer to reality.

Response improvement

In most cases, ChatGPT does a great job with standard queries, but how do you get a quality answer for more complex questions?

- Iterative questions

ChatGPT can answer a sequence of questions, taking the earlier turns of the same dialogue into account. If you are not completely sure what answer you want or what exactly to ask, you can approach the desired result step by step.

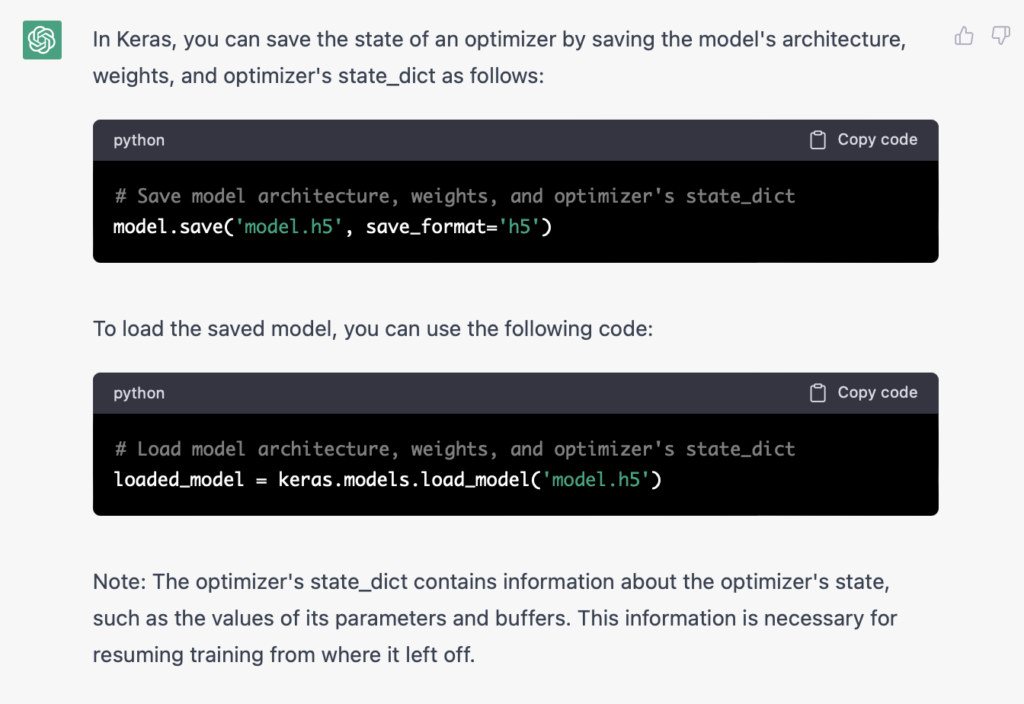

Let’s look at this technique with an example. Say that at a certain stage of training we want to save the state of the optimizer so that we can reuse it for another neural network model.

- “How to save the state of an optimizer in a keras model?”

Here we get the standard response. In this task, however, we need only the state of the optimizer, not the entire model.

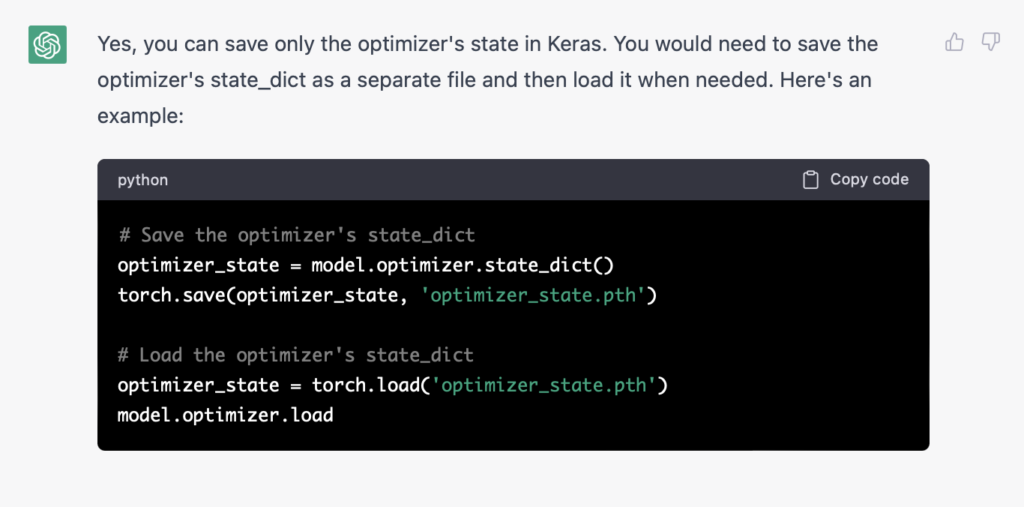

Therefore, in order to get the answer we need, we ask a clarifying question:

- “Can I save only the state of the optimizer?”

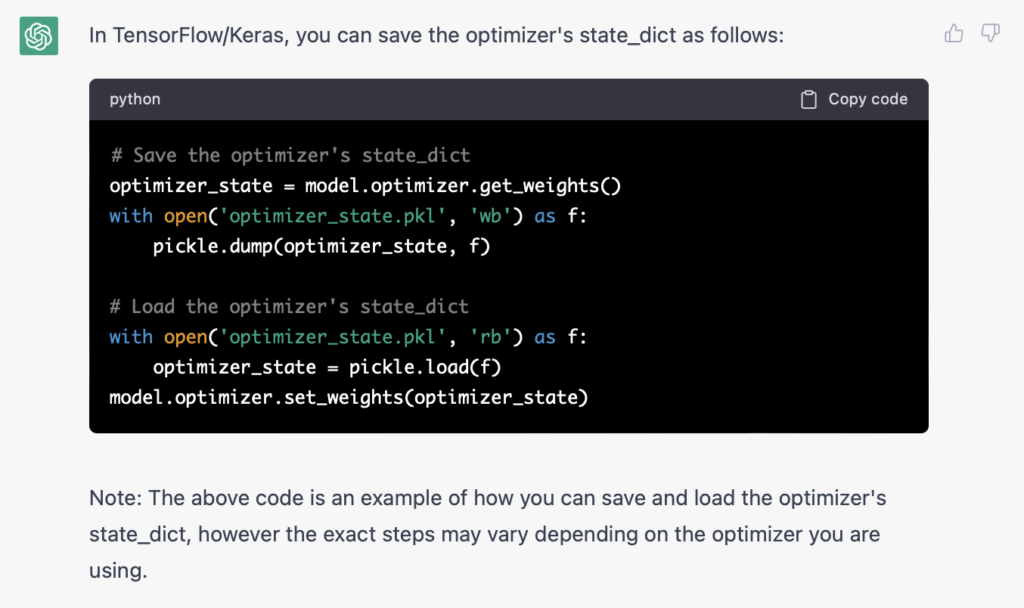

The result looks much closer to what we want. Since all of our training runs on TensorFlow, we make one last iteration:

- “Yes, the same but in tensorflow/keras”

Exactly what was needed!

- Detailed Requests

ChatGPT picks up on most of the keywords you give it. The more precise the request and the more context it contains, the more likely you are to get a quality answer. This approach works well when you already know the result you want and how to get there.

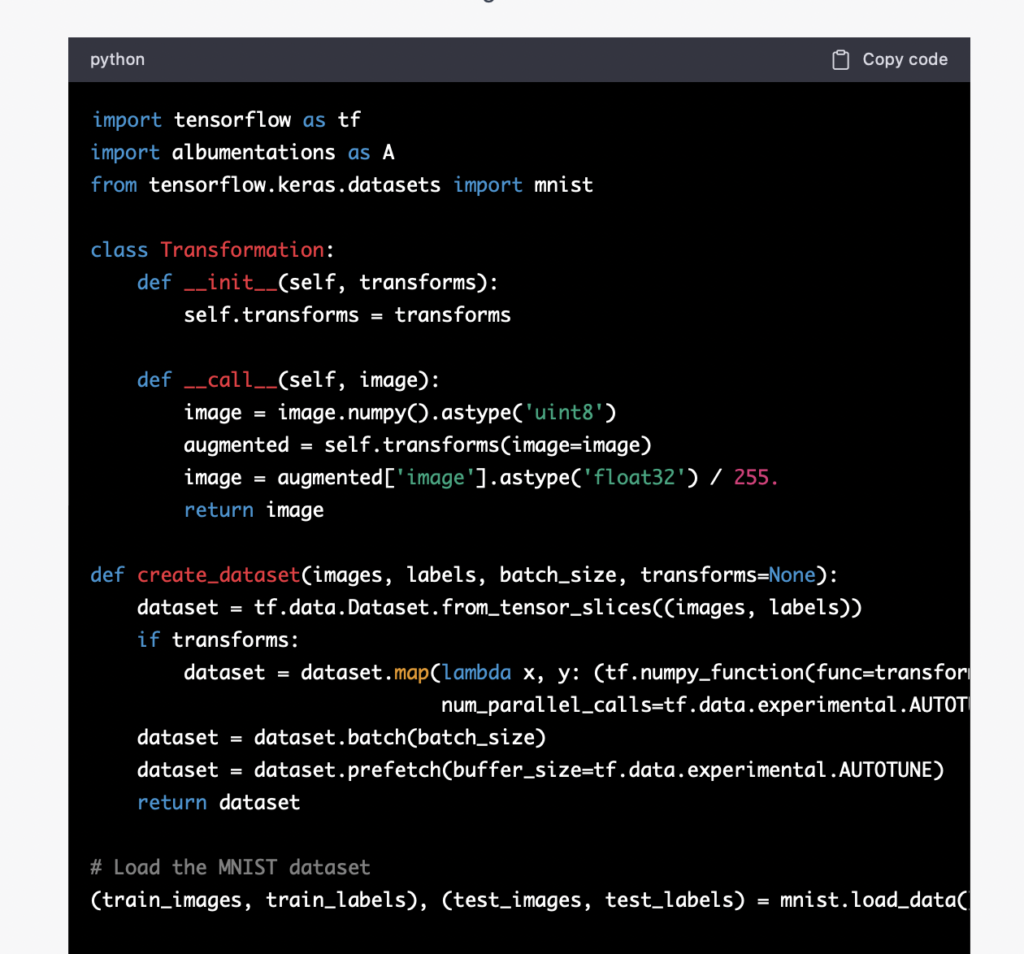

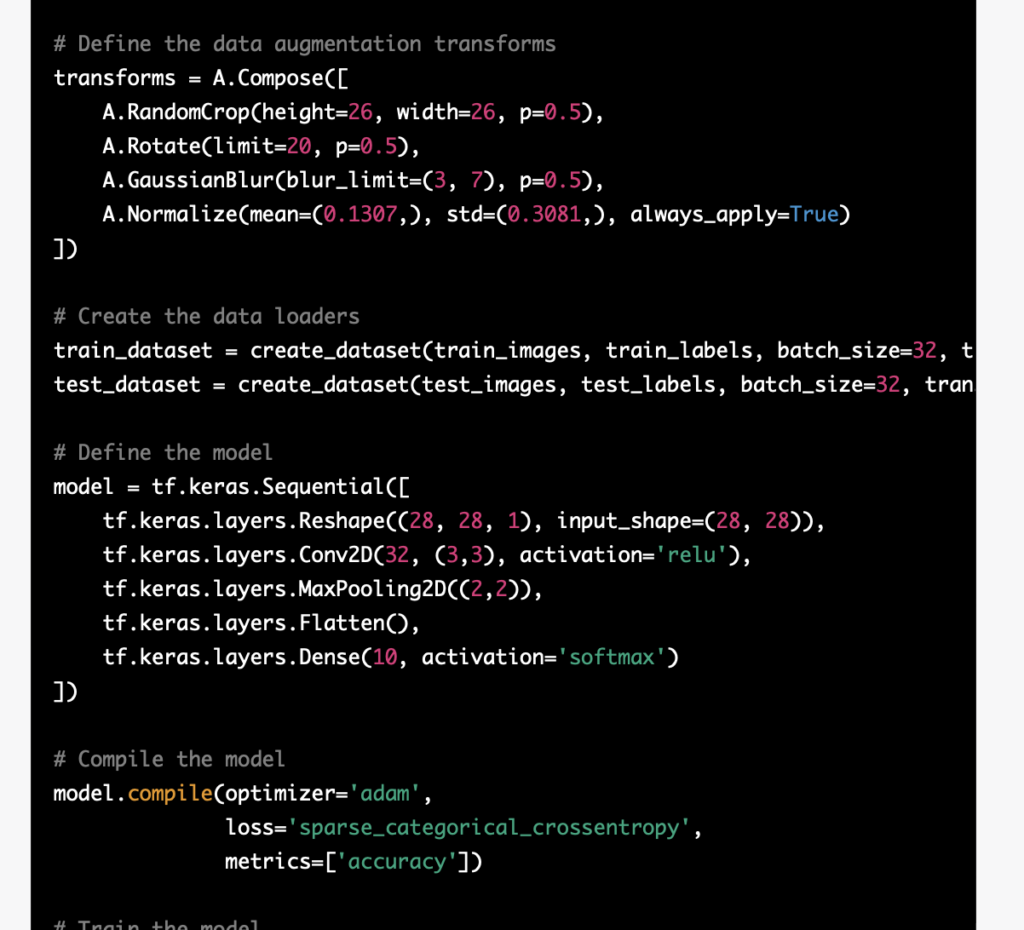

Recall the finished baseline we got at the beginning. Now we want to add augmentations to the training process. We know the library we want to use, Albumentations, as well as the approximate shape of the solution and the methods it will rely on. So let’s make the request to ChatGPT as specific as possible:

- “Create a tensorflow data loader for image classification task, MNIST as an example. Prepare a class called Transformation that gets a list of albumentations, uses __call__ to process a sample with tf.numpy_function”

We get an excellent wrapper for augmentations, plus the code that creates a TensorFlow dataset, which we can use in real tasks.

- Error correction

Despite its excellent results with precise queries and iterative refinement, ChatGPT quite often produces answers containing fairly obvious errors. Such problems can usually be corrected. In some cases it is enough to regenerate the response: ChatGPT can give different answers to the same request, and some will be better than others. You can also ask directly about what went wrong. When you paste the error message into the chat, ChatGPT draws on the previous conversation and tries to correct itself.

For example, in response to one of the queries we’ve already encountered, ChatGPT returned the following:

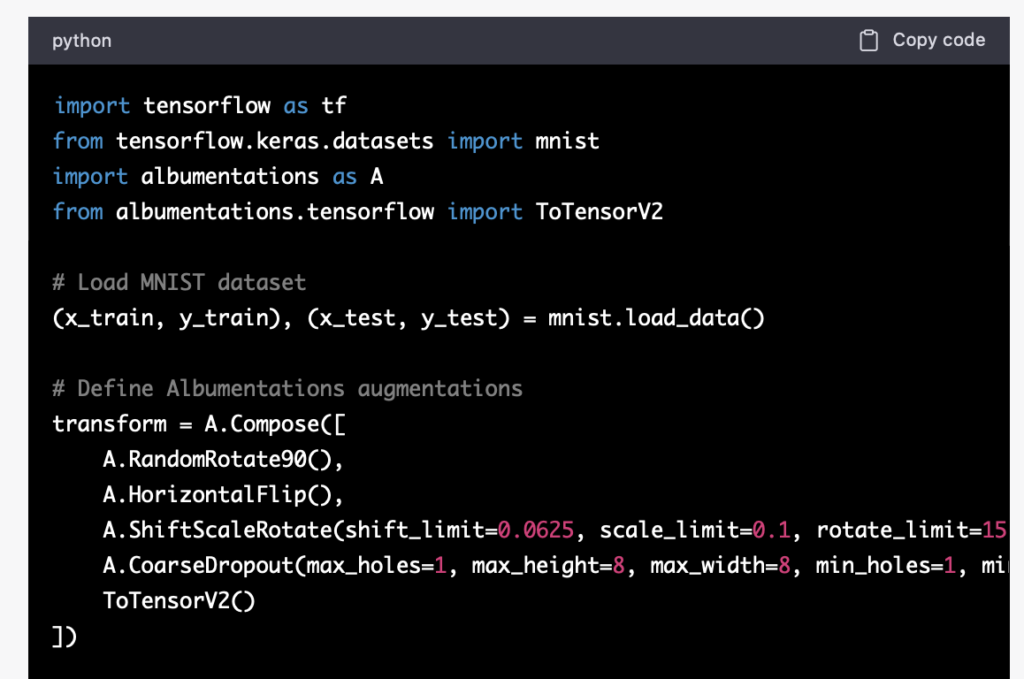

- “Provide an example of how to use albumentations on MNIST dataset with tensorflow”

Here we get the obvious error:

- ModuleNotFoundError: No module named ‘albumentations.tensorflow’

In this case, ChatGPT mixed up methods from PyTorch and TensorFlow and invented albumentations.tensorflow.ToTensorV2; the real ToTensorV2 lives in albumentations.pytorch. Asking the same question a second time gave us a working version without errors.

However, in some cases neither approach works at all.

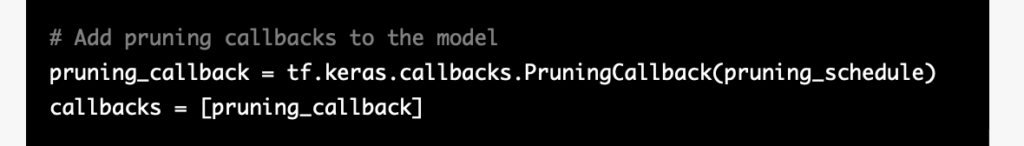

Let’s say we want to optimize a model using the pruning method.

- “Give me a script for pruning a tensorflow keras model”

As in the previous example, we got code that calls non-existent methods. This time, however, trying to improve the answer iteratively leads to an endless cycle of errors:

- Q: “tf.keras.callbacks has no PruningCallback”

- A: “My apologies, you are correct. As of my knowledge cutoff (2021), the PruningCallback was not available in the tf.keras.callbacks module. Instead, you can use the tf.keras.mixed_precision module, which provides a pruning API for both weight and activation pruning.”

…

- A: “Sure, here’s an example of pruning a TensorFlow Keras model using the MNIST dataset and tf.keras.optimizers.schedules.PolynomialDecay:”

- Q: (after finding that the arguments used in the response do not exist) “I think the arguments in PolynomialDecay are wrong here”

- A: “You’re correct, I apologize for the mistake. Here is the corrected version of the code using the correct arguments for tf.keras.optimizers.schedules.PolynomialDecay:”

- Q: “This code gives me this error: RuntimeError: You must compile your model before training/testing. Use `model.compile(optimizer, loss)`.”

- A: “… Here is an updated version of the code to include the compile step:”

- Q: “ValueError: Index out of range using input dim 0; input has only 0 dims for ‘{{node sequential_1/prune_low_magnitude_dense_2/strided_slice}}”

…

Conclusion

In this article, we built the foundations of a machine learning pipeline, explored several ways of prompting ChatGPT to get better answers, looked at the mistakes ChatGPT can make, and learned how to work around them.

Despite the impressive progress of language models, ChatGPT cannot replace humans. It often makes mistakes, gives plausible but false answers, and sometimes cannot cope with the task at all. However, for someone who is confident in their field, this tool can be a great productivity boost. By combining the intuition of an experienced specialist with an understanding of how this neural network works, you can:

- speed up your development;

- get quick ready-made options for prototyping;

- arrive at the correct solution to a complex problem through a series of clarifying queries.

About The Author: Yotec Team
